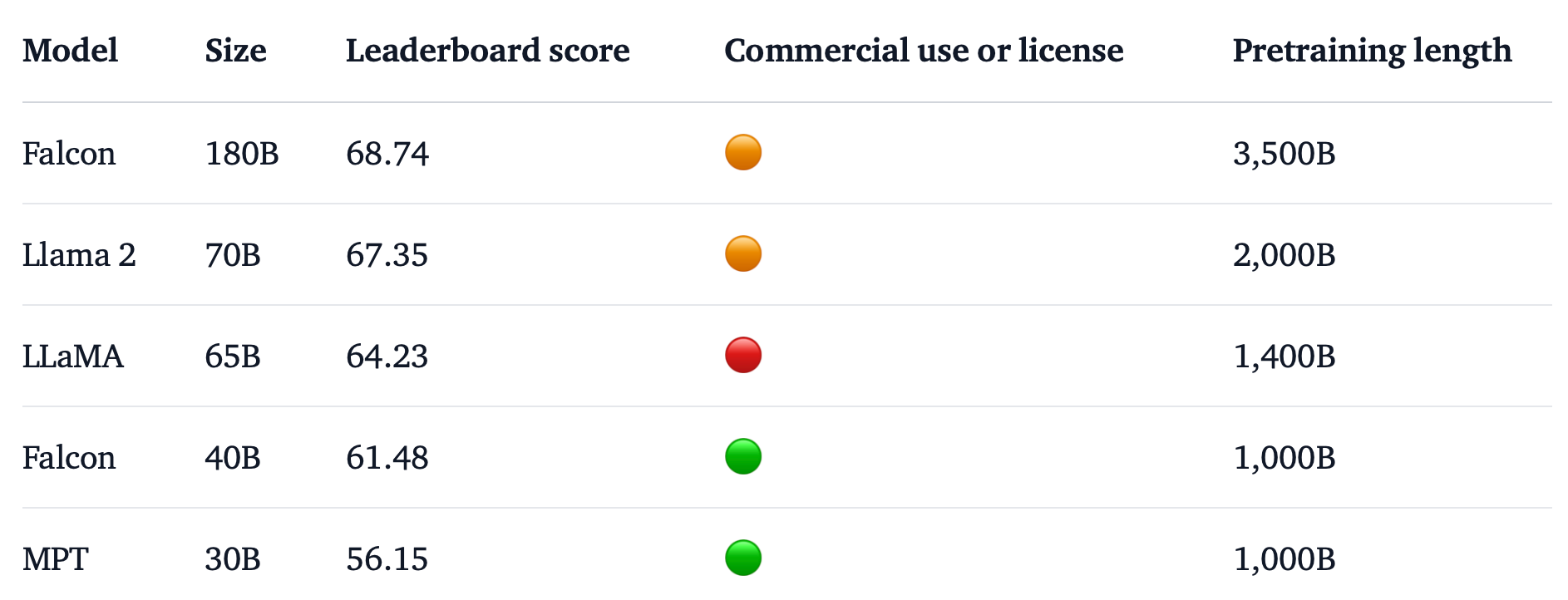

Falcon 180B, an open-source large language model with 180 billion parameters, has made waves in the AI community. It surpasses previous open models, achieves high scores in benchmark tests, and sits between GPT-3.5 and GPT-4 in performance.

The artificial intelligence community has a new reason to celebrate with the introduction of Falcon 180B, an open-source large language model (LLM) that boasts an impressive 180 billion parameters. This powerful newcomer has raised the bar for open-source LLMs and achieved remarkable results in various benchmarks.

Falcon 180B, developed by the Technology Innovation Institute (TII) and recently announced by the Hugging Face AI community, is now available on the Hugging Face Hub. Its architecture builds on the earlier Falcon series of open-source LLMs and incorporates innovations such as multiquery attention. The model has 180 billion parameters and was trained on 3.5 trillion tokens.

What sets Falcon 180B apart is its record-setting single-epoch pretraining. The run used up to 4,096 GPUs simultaneously, for a total of approximately 7 million GPU hours, with Amazon SageMaker used for training and fine-tuning. In parameter count, Falcon 180B is roughly 2.5 times the size of Meta's LLaMA 2, which was previously considered one of the most capable open-source LLMs.
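To put those training figures in perspective, the short sketch below turns them into a rough wall-clock estimate and a size ratio. It is a back-of-the-envelope calculation only: it assumes all 4,096 GPUs ran concurrently for the whole job, and it assumes the comparison is against the 70-billion-parameter LLaMA 2 variant, a figure the article itself does not state. The function names are illustrative.

```python
# Figures quoted in the article.
GPU_COUNT = 4_096
GPU_HOURS = 7_000_000      # "approximately 7 million GPU hours"
FALCON_PARAMS_B = 180      # parameters, in billions

# Assumption (not stated in the article): the comparison is against
# the largest LLaMA 2 variant, which has 70 billion parameters.
LLAMA2_PARAMS_B = 70


def wall_clock_days(gpu_hours: float, gpu_count: int) -> float:
    """Elapsed days if every GPU ran concurrently for the entire job."""
    return gpu_hours / gpu_count / 24


def size_ratio(params_a: float, params_b: float) -> float:
    """How many times larger model A is than model B, by parameter count."""
    return params_a / params_b


if __name__ == "__main__":
    days = wall_clock_days(GPU_HOURS, GPU_COUNT)
    ratio = size_ratio(FALCON_PARAMS_B, LLAMA2_PARAMS_B)
    print(f"Estimated wall-clock training time: about {days:.0f} days")
    print(f"Falcon 180B vs. LLaMA 2 70B: about {ratio:.1f}x the parameters")
```

Under these assumptions the run works out to a little over two months of continuous training, and the parameter ratio lands close to the 2.5x figure quoted above.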

Future AI advancements, Falcon 180B's achievements

Falcon 180B's achievements extend beyond its size. It has surpassed LLaMA 2 and other models in both scale and benchmark performance across a range of natural language processing (NLP) tasks. On the leaderboard for open-access models, it scores 68.74 points, and it comes close to commercial models such as Google's PaLM-2 in evaluations like the HellaSwag benchmark.

Falcon 180B's performance matches or exceeds Google's PaLM-2 Medium on various commonly used benchmarks, including HellaSwag, LAMBADA, WebQuestions, Winogrande, and more. This is a remarkable feat for an open-source model, demonstrating its exceptional capabilities even when compared to solutions developed by industry giants.

Falcon 180B vs. ChatGPT

In a comparison with ChatGPT, Falcon 180B emerges as a more powerful option than the free version but slightly less capable than the paid "Plus" service. It typically falls somewhere between GPT-3.5 and GPT-4, depending on the evaluation benchmark.

The release of Falcon 180B marks a significant leap in the rapid progress of large language models. Beyond simply scaling up parameter counts, advanced techniques like LoRAs, weight randomization, and Nvidia's Perfusion have contributed to more efficient training of these models.

With Falcon 180B now freely accessible on Hugging Face, researchers anticipate that the model will see further enhancements and refinements from the community. Its impressive natural language capabilities right from the start mark an exciting development for open-source AI, showcasing the potential for collaborative advancements in the field. Falcon 180B is poised to inspire further innovations and discoveries in the world of artificial intelligence.
